Beware the dark side of governments turning to AI

AI integration would make it difficult for your government to uphold its responsibilities towards you

Imagine that one day you are flagged to lose your citizenship, without any explanation, because of a single decision made by a black-box government AI system. This is not a far-fetched scenario but a reality if you are a naturalized immigrant in the US, where the Department of Homeland Security (DHS) uses software known as ATLAS to flag immigrants for revocation of their citizenship. And what rules does the software use to determine whether an immigrant should be flagged? The DHS refuses to share the criteria behind its algorithm.

The world’s tech giants and newer start-ups are all racing to become the leaders in Artificial Intelligence (AI). Governments, in turn, have seen the potential of AI and are looking to upgrade their processes by integrating it. Arnauld Bertrand writes that AI use in the public sector has the potential to optimize governments’ operations and deliver better outcomes for society. While AI does have uses in domains such as surveillance, healthcare and transportation, its adoption by democratic governments around the world could undermine the responsibilities they have towards the public.

Privacy

Presumably, you care about keeping your personal information out of the wrong hands. But since most governments have not yet developed their own AI technologies, they turn to private organizations, which means that these organizations could gain access to sensitive government data about you and millions of other citizens. A recent example is the dependence of governments on tech giants in the hope of beating back the pandemic. In 2020, the National Health Service (NHS) of Britain had to open its stores of health data to corporations such as Microsoft, Amazon, and Google, and to two controversial AI firms, Faculty and Palantir. These companies had the opportunity to train their models on the personal data of NHS patients without the patients' consent.

In addition, your government may collect too much data about you in the name of surveillance, violating the fundamental human right to privacy. Autocratic governments (such as Saudi Arabia, China, and Russia) are reportedly already using mass surveillance technologies to reinforce repression. Fifty-one advanced democratic governments have also deployed AI surveillance technologies, and whether these are abused depends only on those governments' objectives.

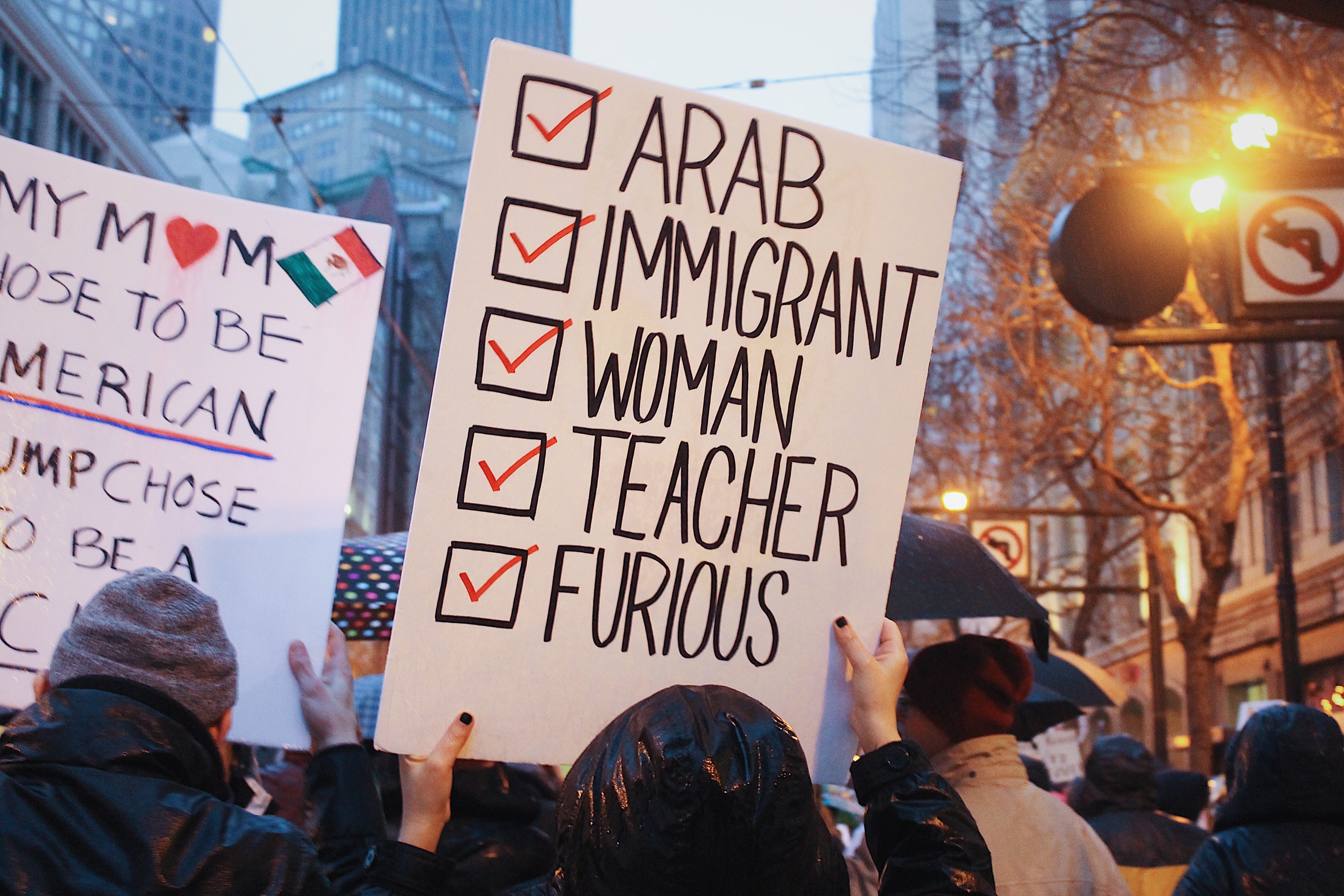

Fairness

Machine-learning models are usually trained on existing data sets, so they inherit the data’s underlying social inequalities, and sometimes the developers’ own prejudices. As a result, their decisions can work against the interests of historically disadvantaged groups. For example, a voice-recognition system trained without diverse data sets could not understand female voices, and a woman who called customer service was told to give up and ask a man to set it up, further amplifying gender bias. Citizens are reportedly afraid of such bias in judicial systems and strongly oppose the use of AI in this sector.

Transparency

Transparency can help mitigate these issues of fairness, and it can be achieved through explainable AI. However, complicated machine-learning algorithms such as neural networks sacrifice explainability for power, performance, and accuracy. Governments using such algorithms will not be able to explain how their decisions are made, which reduces transparency. Consider the scenario we started with, where immigrants were flagged by ATLAS. Muslim Advocates points out that, since the screening criteria behind ATLAS are unknown, we cannot assess whether it is disproportionately flagging people from certain communities.

Accountability

AI can reach and enforce its own decisions. However, if an AI algorithm used by the government makes a false prediction, who is to be held responsible for it? Is it the developer who created the algorithm, the government that employed it, or the company that provided it? This ethical dilemma opens a loophole through which governments can avoid being held accountable for their decisions. So how can governments enforce accountability? According to IBM’s chief AI officer, for AI systems to be accountable, they need to be explainable and free of bias, and they should respect the privacy of the people they affect.

Moving forward

To understand the decisions made by autonomous systems, human intervention is key. RenalTeam, a haemodialysis service provider, employs AI to predict the hospitalization risk of dialysis patients early, but since false outcomes could harm a patient’s life, a human-in-the-loop approach is used so that licensed nurses have the final word on hospitalization. Similarly, keeping humans in the final decision-making step would better allow governments to uphold their responsibilities of fairness, transparency, and accountability, and to protect the privacy of citizens.

Ultimately, most of the issues discussed share a root cause: bias in AI systems. This bias reflects the existing bias in our society. While our governments strive to implement AI in the safest way possible, we need to address our unconscious bias and eliminate discrimination, as all AI can do today is mimic our decisions.
